This tiny microscope implanted in the brain could restore sight to the blind

Microscopes are incredibly powerful, but they aren’t always the best device to study, say, what’s happening within individual brain cells doing real-life tasks. They’re just too clunky compared to the number of cells they magnify.

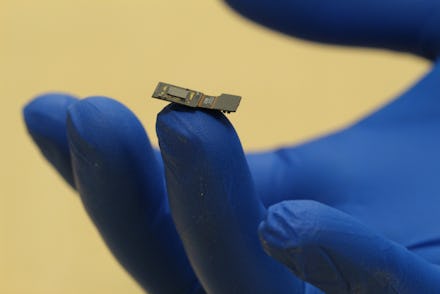

So how about a microscope that weighs about 0.2 grams and is about one-five-thousandth the size of a traditional microscope?

That’s what engineers at Rice University are creating with a project called FlatScope. And they’re aiming to make the technology human-ready, thanks to an ambitious initiative funded by the military’s Defense Advanced Research Projects Agency.

The DARPA initiative encourages scientists to create an implant that can translate brain signals into computer signals and vice versa. The Rice project is just part of that larger initiative and will rely on other DARPA-funded research to reach its full potential.

But assuming all those pieces come together, the Rice team thinks it will have a device ready that could allow neuroscientists to see the brain at work as never before. It could even restore sight.

Glow-in-the-dark neurons

To understand how it works, first we need to understand another project within the larger initiative. Run out of Yale University, it’s working on finding a way to make human neurons light up when they’re doing something. That process has already been developed in mice through genetic engineering, but translating that to humans is trickier. A second related process does the reverse — so when you shine a light on a neuron, it fires.

The FlatScope would sit on a section of the visual cortex and look for flashes of light — from about a million neurons.

That’s way more brain cells than any existing technology can watch, said Jacob Robinson, a computer engineer at Rice working on the project. “I think a million really represents a paradigm shift,” he said. “It forces us to really think outside the box in developing this technology.”

Algorithms convert that pattern of flashing neurons into an image. That’s inspired by work members of the team have done on a project called FlatCam, a lens-less camera about the thickness of a dime. But where that project worked in two dimensions, FlatScope has to work in three to see how neurons interact. “The secret sauce is really in the computation,” said Caleb Kemere, a neuroscientist on the project.
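To give a feel for what "the computation" means in lens-less imaging generally, here is a toy sketch in Python. It is not the FlatScope or FlatCam algorithm, which the article does not describe. It assumes a one-dimensional scene, a made-up binary mask (`MASK`) that mixes light from every scene point onto every sensor pixel, and a standard regularized least-squares solve to undo that mixing. All names and numbers are hypothetical.

```python
# Illustrative only: a toy lens-less imaging model, NOT the team's method.
# Assumption: the sensor reading is a known linear mix of the scene,
# sensor = MASK * scene, and we recover the scene by least squares.

def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x

def reconstruct(mask, measurement, lam=1e-9):
    """Regularized least squares: solve (M^T M + lam I) x = M^T y."""
    mt = transpose(mask)
    normal = matmul(mt, mask)
    for i in range(len(normal)):
        normal[i][i] += lam  # small ridge term keeps the solve stable
    return solve(normal, matvec(mt, measurement))

# Hypothetical 6-pixel sensor looking at a 4-point scene through a mask.
MASK = [
    [1, 0, 1, 0],
    [0, 1, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 1],
    [1, 0, 0, 1],
    [0, 1, 0, 1],
]
SCENE = [0.0, 3.0, 0.0, 1.0]           # two "glowing" points
SENSOR = matvec(MASK, SCENE)           # scrambled reading, no lens involved
RECOVERED = reconstruct(MASK, SENSOR)  # computation stands in for the lens
```

The raw sensor reading looks nothing like the scene, but because the mask is known, the solve recovers the two bright points. A real device would face noise, a third dimension, and about a million sources, which is where the hard work lies.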

Turning an idea into reality

The team has already built a prototype of the device. So far, they’ve only used it to look at manufactured fluorescing samples, and it still relies on wires for power and to transmit its data. But it can already zoom in to about the scale needed to look at individual neurons, and it can keep pace with the brain’s activity, according to Ashok Veeraraghavan, a computational imaging specialist on the project.

Next, the team will be using the system in mice that can move about freely. It will take longer to tackle the range of challenges facing human use — that requires not just the Rice team confirming all their materials are safe for long-term use, but also separate teams finding a way to make the neurons fluoresce and to power the microscope and download its data wirelessly. But the timeline is still aggressive — they want to be ready for human trials within four years.

Watching the brain isn’t just interesting in and of itself, Kemere explained. Scientists have recently realized that how neurons interact depends on context. The signal for a specific image will look different depending on whether you’re relaxed or alarmed, focused or drifting.

So any team looking to project an image into a brain will first need to have watched lots and lots of different neural responses under different conditions. Once scientists know how neurons act when processing visual cues, they should be able to flash light on individual neurons to create the pattern that matches the image they want the person to see, and so restore sight to the blind.

But watching the brain is the crucial first step, and it would underlie any sight restoration effort, since the implant would always need to be watching the brain to understand its current state.