Twitter has introduced a bad vibes warning

You’ll now get a pop-up warning that “conversations like this can be intense.”

Twitter wants to do a quick vibe check. After briefly becoming the internet’s platform of choice this week when Facebook and all its offshoots went completely offline for a day, Twitter announced Wednesday that it will be launching yet another new effort to try to keep things civil.

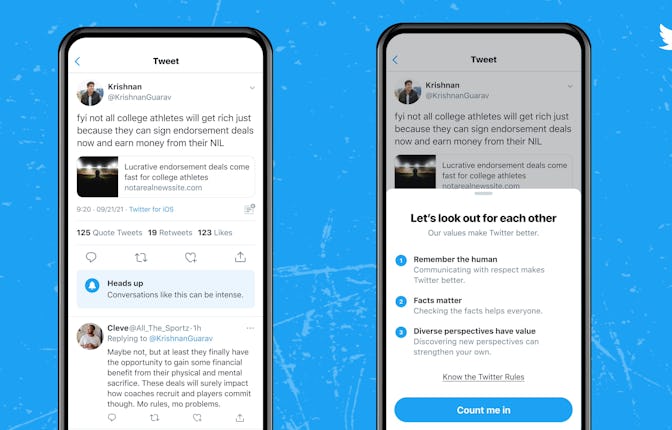

The feature, the latest in Twitter’s attempts to make conversation healthier on its hell app, will hit users with a warning that appears below tweets receiving lots of engagement. “Heads up,” the message reads. “Conversations like this can be intense.” Twitter will also hit people with a pop-up message that is basically a desperate plea for civility: “Let’s look out for each other,” it asks, with reminders to “remember the human,” “facts matter,” and “diverse perspectives have value.” Users will have to acknowledge these messages by tapping the “Count me in” button before resuming their regularly scheduled hate speech.

This is not Twitter’s first attempt at improving the discourse. The company has previously rolled out prompts that have become standard within the app — meaning you’ve probably already gotten good at ignoring them. For example, last year, Twitter rolled out a prompt that asks people to open an article before retweeting it in an effort to make sure people are reading past the headline before sharing.

Skeptical, we at Mic asked Twitter just how helpful these friendly little pop-ups are. The company claimed that the number of people opening articles before retweeting them has increased by 33% since it introduced the prompt. Still, it’s hard to know how much actual reading is happening, or whether people are opening the link and immediately closing it to make the warning disappear. The data does suggest that at least some people feel guilty about not reading the article, though, so that’s something.

Another prompt that Twitter introduced earlier this year asks people to ditch harmful words before hitting the Tweet button. If the app sees that you’re about to send an insult, strong language, or hateful remarks, it will ask you to review the tweet for “potentially harmful or offensive language.” While it won’t stop you from actually hitting send, it is enough of an inconvenience for absolute weirdos to complain about censorship, which frankly makes it a successful feature already. Twitter told Mic that 34% of people revised their reply or decided not to send it at all after receiving the prompt. “After being prompted once, people composed, on average, 11% fewer offensive replies in the future,” a Twitter spokesperson said.

Notably, Twitter didn’t elaborate on how it’s measuring all this. Does Twitter have Precogs on staff who can predict how hateful someone would be without a virtuous warning message from their website? Are they comparing the decrease in toxicity from reasonable people to the hate-laced babble of Twitter eggs who probably receive the warning as a dare to include more slurs? Do people who get banned for hate speech count as being less offensive because they can’t tweet anymore? Sure, maybe the prompts are doing something. It’s just that Twitter’s numbers seem like the friendliest possible interpretation of how effective these features could be; Twitter has reviewed the data and has determined that Twitter is doing a great job.

Early returns on Twitter’s vibe check are mixed. Users have reported seeing the warning of “intense” conversations pop up in the replies of tweets talking about politics and vaccine mandates, which, fair. But it’s also cropped up in more innocuous places, like discussions about open-source algorithms, or even the dreaded Bad Art Friend story. Then again, every conversation on Twitter has the distinct possibility of turning into an absolute shit show, so, maybe Twitter is onto something here.