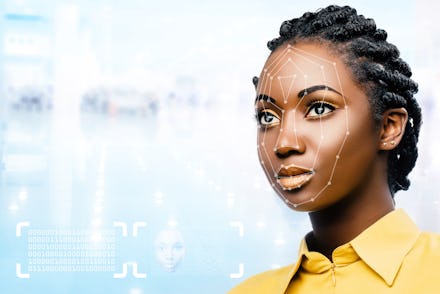

The questionable way Google tried to fix facial recognition's racial blind spot

With criticism of facial recognition resulting in bans across the country, companies are looking to improve the technology. However, the New York Daily News recently found that Google's attempts to improve facial recognition resulted in questionable methods targeting 'dark-skinned' people to collect facial data. These revelations from subcontracted workers, also known as temps, vendors, and contractors (TVCs) at Google, open up questions about whether or not "inclusion" in datasets should be the name of the game.

In July, Google confirmed employees were collecting facial data to improve the Pixel 4's face unlock system. Across the country, TVCs employed by staffing firm Randstad offered people $5 gift cards in exchange for scans of their faces. Improving facial recognition for the purpose of unlocking phones isn't terrible by itself, but Google's methodology leaves a lot to be desired.

Paying people only $5 for their biometric information is questionable enough, but that's not the end of it. According to several people familiar with the project, Randstad directed TVCs to "go after people of color, conceal the fact that people’s faces were being recorded and even lie to maximize their data collections."

One former employee told the New York Daily News that Randstad sent a team to Atlanta to "specifically" target Black people, including those who were homeless.

Although Randstad was in charge of day-to-day operations, the report indicates that Google managers were not completely unaware. Google managers took part in conference calls and were "involved in many aspects of the data collection and review, including the mandate to go after 'darker skin tones'."

Research has shown that facial recognition struggles to read darker-skinned people, and Black people especially. In July 2018, the American Civil Liberties Union tested Amazon's facial recognition program, Rekognition. The program falsely matched 28 members of Congress to mugshots, including six members of the Congressional Black Caucus. Then, a January 2019 study at the M.I.T. Media Lab found that Rekognition made more errors when recognizing darker-skinned women.

During a House hearing where advocates requested Congress place a moratorium on facial recognition, Joy Buolamwini, founder of the Algorithmic Justice League, testified on her research:

“In one test, Amazon Rekognition even failed on the face of Oprah Winfrey labeling her male. Personally, I’ve had to resort to literally wearing a white mask to have my face detected by some of this technology. Coding in white face is the last thing I expected to be doing at M.I.T., an American epicenter of innovation.”

In the past, Google has had issues with its technology even recognizing Black people as human. Back in 2015, recognition algorithms in Google Photos classified Black people as gorillas. The company's resolution was to simply stop its program from identifying gorillas at all.

Given Google's history of its own programs literally labeling Black people as animals, its contractors' reliance on dehumanizing methods to collect facial data shows that tech has severe problems in how it conceptualizes and interacts with Blackness. A method of collection that relies on a lack of informed consent should be scrutinized.

Including Black people in datasets doesn't address concerns about how facial recognition contributes to surveillance and endangers communities of color. This report shows that even inclusion can be conducted in dubious ways.