This trash-talking robot knows exactly how to hurt you

Trash talk can make even the most confident among us lose their chill. Recent research underscores just how powerful these fighting words can be. A study presented at a conference on Robot & Human Interactive Communication in New Delhi in October found that trash talk trips people up, even if it's coming from a robot.

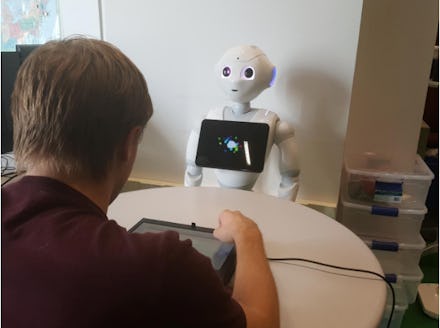

In the study, which stemmed from a student project in the Carnegie Mellon University course AI Methods for Social Good, 40 participants played against a robot in a game often used to research rationality, according to a university statement. The robot was Softbank Robotics’ Pepper, a wide-eyed, four-foot-tall humanoid personal assistant found in retail, hospitality, healthcare, and other settings.

Over the course of 35 games, Pepper either trash-talked the participants or gassed them up. The robot’s trash talk was pretty tame by locker room standards (with subtle shade, like “I have to say you are a terrible player”), and the participants assigned to get insulted knew they shouldn’t take a machine’s words personally. "One participant said, 'I don't like what the robot is saying, but that's the way it was programmed so I can't blame it,'" Aaron Roth, the study’s lead author, said in the statement.

Still, the trash talk seemed to get under their skin. While all participants’ performance improved with every game they played, those whom Pepper criticized didn’t score as well as those it encouraged.

As anyone who’s received a flurry of “You got this!” texts from friends and family before an exam or job interview knows firsthand, people’s comments can have a real impact on our performance. There’s also plenty of research evidence for this phenomenon, including a recent study which found that Mario Kart players’ performance suffered when their opponents trash-talked them, the BBC reports.

The CMU study shows that robots’ comments can have similar effects, which has implications for their use as companions, as well as in mental health and educational settings, Afsaneh Doryab, a researcher at CMU at the time of the study, said in the statement.

Fei Fang, an assistant professor at CMU who taught the course from which the research emerged, noted that the study was one of the first to look at robots that aren’t cooperating, according to the statement. Its findings could be relevant in situations like online shopping, where the goals of Alexa and other home assistants may differ from ours. While we seem most concerned about robots taking our jobs, we might need to worry about them insulting us, too.