This AI can "read" your mind by looking at your brain scans

Mind reading could be possible in the future, although it probably won't be the kind of direct brain-to-brain telepathy performed by superheroes.

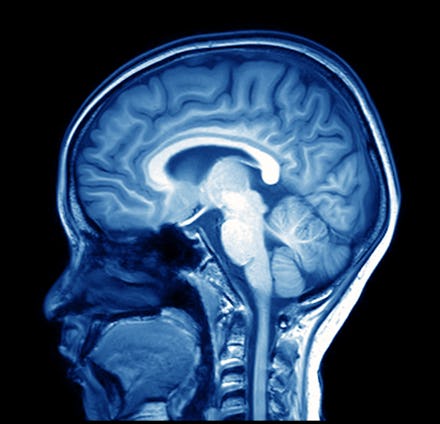

Scientists at Carnegie Mellon University are decoding human thoughts by combining brain scans collected from MRI machines with machine learning algorithms. Machine learning is a subset of artificial intelligence that lets machines learn from data rather than being explicitly programmed.

Here's how it works

The scientists' machine learning algorithms help distinguish "building blocks" for human thoughts. These building blocks then form patterns, and scientists are sometimes able to interpret those patterns as complete sentences.

“One of the big advances of the human brain was the ability to combine individual concepts into complex thoughts, to think not just of ‘bananas,’ but ‘I like to eat bananas in evening with my friends,’” Marcel Just, a psychology professor at Carnegie Mellon University and the study's lead author, said in a press release.

Everyday objects such as a banana "evoke activation patterns that involve the neural systems we use to deal with those objects," the release said.

In other words, scientists can tell that we're thinking of a banana from the activation patterns our brains produce, which reflect the ways we interact with one: holding it, biting it and looking at it, for example.
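To make the idea concrete, here is a toy sketch in Python of how a program might match a new activation pattern to a known concept. It is not the researchers' method: the concept names, the four-number "patterns" and the nearest-average matching rule are all assumptions made up for illustration, and real brain scans contain far more measurements.

```python
import math
import random

# Hypothetical "prototype" activation patterns, one number per brain region.
# These values are invented for illustration, not taken from the study.
PROTOTYPES = {
    "banana": [0.9, 0.1, 0.7, 0.2],
    "hammer": [0.1, 0.8, 0.6, 0.1],
    "house": [0.2, 0.2, 0.1, 0.9],
}


def simulate_scan(concept, rng, noise=0.05):
    """Return the concept's prototype pattern with small Gaussian noise added."""
    return [value + rng.gauss(0, noise) for value in PROTOTYPES[concept]]


def centroid(patterns):
    """Average a list of equal-length patterns, region by region."""
    count = len(patterns)
    return [sum(column) / count for column in zip(*patterns)]


def train(labelled_scans):
    """Learn one average pattern per concept from (concept, pattern) pairs."""
    grouped = {}
    for concept, pattern in labelled_scans:
        grouped.setdefault(concept, []).append(pattern)
    return {concept: centroid(patterns) for concept, patterns in grouped.items()}


def decode(model, pattern):
    """Guess the concept whose learned average is closest to the new pattern."""
    return min(model, key=lambda concept: math.dist(model[concept], pattern))


rng = random.Random(0)  # fixed seed so the simulated scans are repeatable
training = [(c, simulate_scan(c, rng)) for c in PROTOTYPES for _ in range(20)]
model = train(training)

new_scan = simulate_scan("banana", rng)
print(decode(model, new_scan))
```

The program first "learns" an average pattern for each concept from labelled examples, then labels a new scan by finding the closest average. Decoding whole sentences, as the study describes, would require combining many such concept-level guesses, which this sketch does not attempt.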

The study says that thoughts as complex as "The witness shouted during the trial" can be decoded using these methods.

In the future, one can only hope that this technology will be used for good, rather than dystopian evil. At the very least, it helps us better understand how our own brains work.