Deepfakes 101: Everything you need to know about the surreal technology

Deepfakes have been in the news a lot recently as the election ramps up. Privacy and security advocates fear the technology’s use in fraud and extortion, there’s word that deepfakes are getting easier to make, and many women have become victims of nonconsensual deepfake pornography. These videos are arriving while the country faces a media literacy crisis, meaning the often harmful, misleading, and incorrect information spread by deepfakes is likely to be more readily accepted by many.

However, don’t confuse deepfakes with other video edits and manipulation. The key to deepfakes is the use of A.I. and machine learning to make ultra-realistic videos. Non-deepfake edits likely involve splicing and audio syncing that can be done in regular video editing software.

Although members of both parties appear to have fully embraced video manipulation, neither has quite gotten to the point of making deepfakes... yet. There is genuine concern, however, that hostile countries could create deepfakes to mislead Americans ahead of the November election.

So being aware of the threat of deepfakes and able to spot them is more important than ever. This guide will help you understand more about these videos and how to keep yourself from falling for one.

What is a deepfake?

The word deepfake comes from a combination of “deep learning” and “fake.” The deep learning part is important to note, because it’s the backbone of how deepfakes are made.

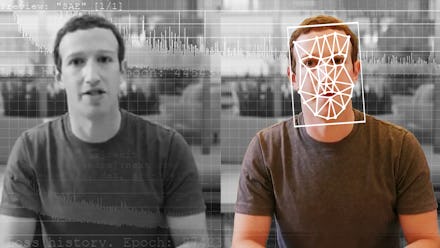

To make a deepfake, the creator needs a target — we’ll use Facebook CEO Mark Zuckerberg for our example — and machine learning architecture. This architecture uses A.I. to analyze a database of different images of the target’s face. After studying the face, it’ll eventually learn how to recreate it.

Now that it knows how the target’s face moves, it’ll know how to map a separate actor’s mouth onto that face in a convincing way. So, say we want to make a video of Zuckerberg saying something out of character. This means teaching the A.I. how human faces work, how Zuckerberg’s face works (by analyzing his images), and telling it to set an actor’s mouth in place of the real one.

Other deepfakes can be made by teaching the A.I. how a general human face works and “face swapping” one face onto another. This is an easier and quicker way to make deepfakes, but it’s also more prone to errors and inconsistencies that make it easier to spot the videos as fakes.

A deepfake can also be more convincing if the voice sounds closer to the target’s actual voice. Creators can use the same technology to create synthesized voices by feeding another A.I. a bunch of samples of someone’s voice to replicate it. This technique has already been used by thieves who stole over $200,000 from a company in September 2019 by using a synthesis of the CEO’s voice and tricking an assistant into wiring money into an account.

How to tell if a video is a deepfake

The biggest signs of weirdness are often found around the subject's mouth and jawline. It might seem blurry around the edges, or like something isn’t aligned quite right. Your uncanny valley instincts — that creepy feeling you get when something inhuman looks too freakishly human-like — might be pinging when you look at the face. It might feel like your brain is telling you something is off, but your eyes need another rewatch (or two) to figure it out.

Another tell can often be found in the mouth movements. They might not seem to fit, as if the mouth is moving too quickly or it seems too slippery — which could be the product of mismatched lighting or lip syncing.

Lighting problems might be widespread in the video if you look carefully, especially around the forehead or cheek areas. This is because the computer doesn’t always account for lighting changes that happen when people move their heads.

Let’s take a look at some examples. In an article by MIT Technology Review, you’ll see plenty of wonky, weirdly shaped heads swerving around to sing a song for a meme, like the above Obama video. These are deepfakes that anyone can make, but they’re, well, completely unconvincing. They look like what happens to your reflection when you mess around with a funhouse mirror.

On the other hand, the Zuckerberg example is pretty good. His mouth area does seem a little unusual, especially if you’ve seen a video of Zuckerberg speaking before, and the side of his neck becomes misshapen at some point during the clip. His ear also appears to blip up and down early on in the video.

With deepfake videos like the false Nixon speech from the ‘IN EVENT OF MOON DISASTER’ project, it’s much harder to tell. Image filters worked very well to hide irregularities, the creators chose a good source video to mimic, and the actor was actually trying to act. To me, the biggest tell that it’s false is a bit of awkward head movement and the robotic voice, both of which can be brushed aside by someone simply skimming and absorbing the wrong information.

Are there better ways to spot a deepfake?

Why don’t we just use computers to identify these deepfakes? Because, frankly, they still suck at spotting them. The best detector to come out of a recent deepfake detection competition was only 65 to 82 percent accurate.

Detection also struggles to keep up with deepfake creation. Deepfakes can improve as quickly as people learn to spot them: The Guardian noted that people used to watch a subject’s eyes for a lack of blinking to tip them off to a deepfake. As soon as word got out, creators started adding blinking into their videos.
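To make the old blinking tell concrete, here is a minimal Python sketch of how such a check might work. It is an illustration only, not how any real detector is built. It assumes some face-tracking tool (not shown here) has already produced a hypothetical "eye openness" score for every frame, from 0.0 (shut) to 1.0 (wide open). The function names, the 0.2 "closed" cutoff, and the five-blinks-per-minute threshold are all made-up values chosen for the example.

```python
from typing import List


def count_blinks(eye_openness: List[float], closed_below: float = 0.2) -> int:
    """Count blinks, treating each run of consecutive 'closed' frames as one blink.

    eye_openness: hypothetical per-frame scores, 0.0 (shut) to 1.0 (open).
    closed_below: assumed cutoff under which the eye counts as closed.
    """
    blinks = 0
    eyes_closed = False
    for score in eye_openness:
        if score < closed_below:
            if not eyes_closed:
                blinks += 1  # the eye has just closed: a new blink starts
            eyes_closed = True
        else:
            eyes_closed = False
    return blinks


def blinks_per_minute(eye_openness: List[float], fps: float,
                      closed_below: float = 0.2) -> float:
    """Convert the blink count into a rate, given the video's frame rate."""
    if not eye_openness or fps <= 0:
        return 0.0
    minutes = len(eye_openness) / fps / 60
    return count_blinks(eye_openness, closed_below) / minutes


def looks_suspicious(eye_openness: List[float], fps: float,
                     min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose subject blinks less often than an assumed minimum rate."""
    return blinks_per_minute(eye_openness, fps) < min_blinks_per_minute


# Example: two 10-second clips at 30 frames per second.
never_blinks = [1.0] * 300
blinks_twice = [1.0] * 300
for start in (50, 200):
    for frame in range(start, start + 4):
        blinks_twice[frame] = 0.05  # eye shut for four frames

print(looks_suspicious(never_blinks, fps=30))
print(looks_suspicious(blinks_twice, fps=30))
```

A check like this only catches the fakes that forget to blink, which is exactly why it stopped working once creators added blinking.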

But here’s some good news: It took the directors behind the Nixon film about three months to make a six-minute video. At this time, you’re probably not going to run into many videos that are this convincing because it’d be too much effort.

Should we be alarmed over deepfakes?

The World Economic Forum has expressed concern over the potential for agitators to use deepfakes to influence elections. There’s a legitimate worry that folks will make false videos of candidates saying outrageous things that could sink their real campaigns. Facebook has already attempted to make a stand against deepfakes, and Microsoft has recently released a tool to try to combat them before the election. However, despite how divisive this current election is, deepfakes of candidates haven’t been a widespread problem.

Unfortunately, deepfakes are seeing more use in pornography. Women tend to be the victims of this kind of harassment, whether they’re celebrities or not, and tragically, there aren’t many courses of action available to the victims. Our laws are barely sufficient for restitution against revenge porn, let alone deepfake porn.

However, when it comes to politics, we might not have much to worry about just yet. There’s a lot of flag waving and warnings about deepfakes influencing the election, but we’re 62 days away from the vote and it still hasn’t happened.

“If you were to ask me what the key risk in the 2020 election is, I would say it’s not deepfakes,” Kathryn Harrison, CEO of the coalition fighting digital misinformation, DeepTrust Alliance, told CNET. “It’s actually going to be a true video that will pop up in late October that we won’t be able to prove [whether] it’s true or false.”

So while deepfakes may be a problem in the future, they might not be the biggest issue we’ll have during the 2020 election.